The Art of Model Packaging: A Guide to Deploying Machine Learning Models

Machine learning models are powerful tools that can help businesses make data-driven decisions, optimize processes, and gain competitive advantages. However, building a successful machine learning model is only one part of the process. Deploying and packaging these models effectively is equally important to ensure their seamless integration into production systems. In this blog post, we will explore the best practices and tools for packaging machine learning models for deployment.

Why Model Packaging Matters

Model packaging is the process of preparing a machine learning model for deployment so that it can be easily integrated into an application or system. Proper model packaging ensures that the model can be run consistently in different environments, making it easier for developers to leverage its predictive capabilities without worrying about compatibility issues.
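One way to catch compatibility issues early is to compare the library versions a model was packaged with against what is installed in the target environment. The sketch below is a minimal illustration using only the Python standard library; the function name `check_environment` and the example pin are hypothetical, not part of any specific packaging tool.

```python
from importlib import metadata


def check_environment(pinned):
    """Compare pinned package versions against what is installed.

    `pinned` maps a distribution name to the exact version the model
    was packaged with. Returns a list of human-readable problems;
    an empty list means the environment matches the pins.
    """
    problems = []
    for name, expected in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name}: not installed (expected {expected})")
            continue
        if installed != expected:
            problems.append(f"{name}: installed {installed}, expected {expected}")
    return problems


if __name__ == "__main__":
    # Hypothetical pin; replace with the versions your model was built with.
    for problem in check_environment({"numpy": "1.26.4"}):
        print(problem)
```

Running a check like this at application startup turns a silent version mismatch into an explicit error message before the model serves any predictions.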

Best Practices for Model Packaging

1. Use Containerization: Containerization technologies like Docker package a machine learning model together with its dependencies, such as the language runtime and libraries, into a single image. The same image can then run on a developer machine, a test server, or a production cluster, which keeps the environment lightweight and reproducible.

2. Version Control: Keep track of different versions of your model and its packaging to facilitate collaboration, debugging, and rollback in case of issues. Tools like Git can help manage versions effectively.

3. Include Documentation: Documenting the model’s input and output specifications, dependencies, and any preprocessing steps can make it easier for others to understand and use the model in production.
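The versioning and documentation practices above can be combined in a small machine-readable manifest that ships next to the model file. The following is a minimal sketch, assuming a JSON manifest; the `ModelManifest` fields and the `build_manifest` helper are illustrative choices, not an established standard.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelManifest:
    """Hypothetical manifest describing one packaged model."""
    name: str
    version: str
    inputs: dict                 # e.g. {"features": "float32[4]"}
    outputs: dict                # e.g. {"score": "float32"}
    dependencies: list = field(default_factory=list)
    preprocessing: list = field(default_factory=list)
    sha256: str = ""             # checksum of the serialized model


def build_manifest(model_bytes, **fields):
    """Return a JSON manifest string for the given serialized model."""
    manifest = ModelManifest(**fields)
    # The checksum ties the manifest to one exact model artifact.
    manifest.sha256 = hashlib.sha256(model_bytes).hexdigest()
    return json.dumps(asdict(manifest), indent=2, sort_keys=True)


if __name__ == "__main__":
    # Placeholder bytes stand in for a real serialized model file.
    print(build_manifest(
        b"placeholder model bytes",
        name="churn-classifier",
        version="1.0.0",
        inputs={"features": "float32[4]"},
        outputs={"score": "float32"},
        dependencies=["numpy==1.26.4"],
        preprocessing=["standardize numeric features"],
    ))
```

Because the manifest is plain text, it can be committed to Git alongside the packaging code, while the checksum makes it possible to confirm which model file a given manifest describes.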

Tools for Model Packaging

1. Docker: Docker is a popular containerization platform that allows you to package your model along with its dependencies into a portable container.

2. TensorFlow Serving: If you are working with TensorFlow models, TensorFlow Serving provides a convenient way to deploy machine learning models in production environments.

3. ONNX Runtime: ONNX Runtime is an open-source, high-performance inference engine for Open Neural Network Exchange (ONNX) models. Because models from several training frameworks can be exported to the ONNX format, it is useful for deploying the same model across different platforms.
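As an illustration of how an application talks to a packaged model, the sketch below builds a prediction request for TensorFlow Serving's REST API, which accepts a JSON body with an `instances` list at a `/v1/models/<name>:predict` path. The host, the model name `my_model`, and the helper `build_predict_request` are assumptions for the example; port 8501 is the REST port commonly used in TensorFlow Serving's own examples and may differ in your setup.

```python
import json
import urllib.request


def build_predict_request(host, model_name, instances, port=8501):
    """Build (but do not send) a TensorFlow Serving REST predict request."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical model name and input; requires a running server to succeed.
    request = build_predict_request("localhost", "my_model", [[1.0, 2.0, 3.0]])
    with urllib.request.urlopen(request) as response:
        print(json.loads(response.read()))
```

Keeping request construction separate from sending makes the payload easy to inspect and test without a running server.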

Conclusion

Model packaging is a crucial step in the machine learning lifecycle: it determines whether a trained model can be deployed into production systems reliably. By containerizing models, versioning them, documenting their interfaces, and using tools designed for model deployment, data scientists and developers can streamline packaging and get more value from their models in business operations.

-

01

01Packaging Machinery: Beyond Sealing, Driving an Efficient, Smart, and Sustainable Future

21-01-2026 -

02

02Automatic Tray Loading and Packaging Equipment: Boost Efficiency to 160 Bags/Minute

21-11-2025 -

03

03Automatic Soap Packaging Machine: Boost Productivity with 99% Qualification Rate

21-11-2025 -

04

04A Deep Dive into Automatic Toast Processing and Packaging System

18-11-2025 -

05

05The Future of Bakery Production: Automated Toast Processing and Packaging System

18-11-2025 -

06

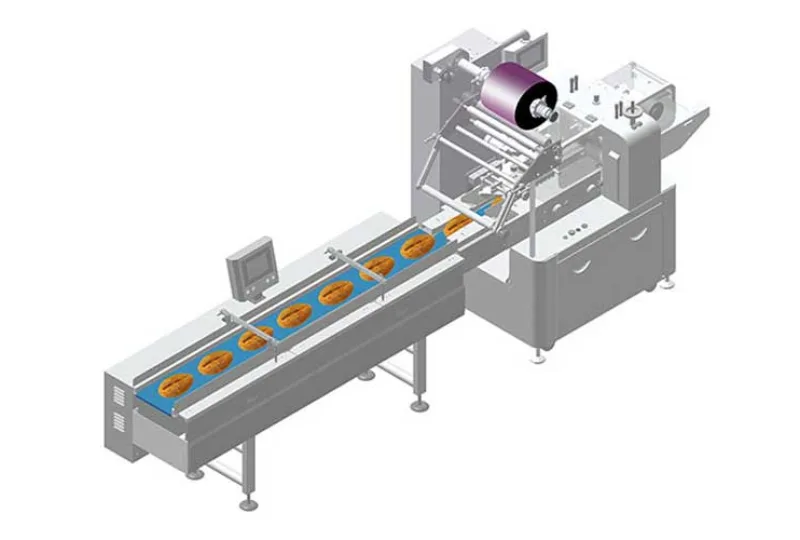

06Reliable Food Packaging Solutions with China Bread, Candy, and Biscuit Machines

11-10-2025 -

07

07High-Performance Automated Food Packaging Equipment for Modern Production

11-10-2025 -

08

08Reliable Pillow Packing Machines for Efficient Packaging Operations

11-10-2025 -

09

09Advanced Fully Automatic Packaging Solutions for Efficient Production

11-10-2025 -

10

10Efficient Automatic Food Packaging Solutions for Modern Production

11-10-2025